Towards Immersive Virtual Courts, Moving Beyond the Status Quo: Feedback from Experiments Conducted at the Cyberjustice Laboratory

Aurore Troussel-Clément – Research Assistant, Cyberjustice Laboratory, and PhD student, Université de Montréal

Introduction

Between October and December 2022, demonstrations and technical tests of immersive virtual hearings took place at the Cyberjustice Laboratory at the University of Montréal and at the Harvard Visualization Lab. These experiments sought to explore the capabilities of immersive virtual court platforms and, by testing these platforms, to improve the experience and environment of virtual hearings.

During the pandemic, many courts and tribunals around the world had to conduct hearings fully online. In a survey for the National Center for State Courts of the United States, 70% of respondents (e.g., judges, clerks, and lawyers) reported using videoconferencing services at least once during the pandemic.[1] The OECD highlighted that during the pandemic "At an unparalleled speed, many courts around the world have started transitioning into online hearings, many of them for the first time."[2] In Canada, 90% of the 44 courts surveyed reported having heard matters deemed urgent via technology.[3]

The rapid adoption of virtual hearings was made possible by technological change. Until recently, videoconferencing required expensive, dedicated hardware installed in courtrooms or conference rooms. More recently, software-based videoconferencing has made it possible to hold a videoconference with only two requirements: (i) a computer, smartphone, or tablet; and (ii) internet access with adequate bandwidth. This evolution allowed courts to move from technology-augmented courtrooms to virtual hearings during the pandemic.[4]

As noted by David Tait, Meredith Rossner, and Martha McCurdy, "Two things changed with the pandemic. Multiple participants were now 'remote', including the lawyers and sometimes judicial officers. There was also a dramatic growth in the use of cheaper and more accessible software-based video conferencing platforms (eg, Webex, Zoom, Skype, Kinly CVP and Microsoft Teams) and many courts used more than one platform."[5] The Arizona Supreme Court illustrates this trend: it issued more than 200 Zoom licenses to court personnel statewide during the pandemic.[6]

Figure 1 (Source: WolfVision)

Figure 2 (Source: Zoom)

The experience of the pandemic offers valuable lessons on how virtual hearings could be improved. One notable effect of virtual hearings concerns the experience of court staff, lawyers, and litigants. The main issues identified in the literature are that virtual courts seem less authentic and legitimate than physical courtrooms, that they lack bodily presence, and that they are 'depersonalized' and 'less humane'.[7] Another important effect concerns perception: even before the pandemic, studies showed that behavioral evidence is interpreted differently online.[8]

Figure 3 (Source: www.miamiherald.com/news/local/crime/article241708946.html)

Figure 4 (Source: www.fedcourt.gov.au/online-services/virtual-hearings)

Meredith Rossner, David Tait, and Martha McCurdy analyzed Figures 3 and 4 above and suggested that consistent framing, lighting, and display are particularly important in a court context, and that the distance between the person and the camera also affects perception. One way to address these issues is to develop guidelines for virtual hearings, together with a dedicated software-based videoconferencing platform for courts. Another solution is to investigate immersive virtual hearings, which could better meet the expectations of litigants, court staff, and lawyers.

David Tait and his team have been conducting demonstrations of immersive virtual hearings for several years. He defines an immersive virtual hearing as a virtual environment in which other users are seen in something approximating a courtroom: arrayed in a linear configuration rather than a gallery view, supporting eye contact between participants, and providing directional sound cues.

In 2018, David Tait and Vincent Tay conducted a first virtual court pilot study that operationalized some features of an immersive virtual courtroom.[9] As part of this study, they carried out a proof of concept in which actors performed a script about a civil dispute and were filmed in a green screen room:[10]

Figures 5 and 6: Virtual court proof-of-concept study, 2018. Photos of the film set by Vincent Tay. David Tait & Vincent Tay (2019). Virtual Court Study: Report of a Pilot Test 2018.

(Source: https://doi.org/10.26183/5d01d1418d757)

For the proof of concept, the green background was replaced by a virtual court environment designed with architecture software. The actors were then embedded in this virtual environment using a video game engine (Unity):[11]

(…) perspective of the tribunal member. David Tait & Vincent Tay (2019). Virtual Court Study: Report of a Pilot Test 2018. (Source: https://doi.org/10.26183/5d01d1418d757)

After the proof of concept, a demonstration took place at the Queensland Supreme Court (Australia) during a judicial conference. It consisted of a live mock trial using three courtrooms connected to each other. The experimental study used the actors and script from the proof of concept and involved 150 lay research participants, who were randomly assigned either to a face-to-face mock trial or to the immersive condition. The study measured and compared, for physical and virtual hearings, 'communication' (i.e., how well other participants communicated during the trial), 'participation' (i.e., how participants reported on their own experience of the process), and 'environment' (i.e., how participants reported on their experience of the setting).[12] The results suggested that conducting justice hearings in virtual environments may significantly affect how participants experience the quality of communication, the level of participation, and the comfort of the environment.[13] Importantly, this pilot study demonstrated the technical feasibility of immersive virtual courts and laid the groundwork for further technical tests by David Tait and his team. The latest demonstrations took place in October and November 2022 at the Cyberjustice Laboratory and at the Harvard Visualization Lab, as described below.

October 2022 – Technical Test – Cyberjustice Laboratory.

For this demonstration, the virtual court platform used the Unreal game engine and provided a view of the virtual courtroom into which court users were inserted as avatars. The platform was created by Volker Settgast, Fraunhofer Institute, in Graz, Austria. Relying on game engine software made it possible to present the hearing in a way that creates a sense of immersion. The virtual courtroom was designed by an architect, the actors were filmed in green screen rooms, and the scene was assembled in the studio.

Participants downloaded software to run the program, which was hosted on an Amazon cloud server in Frankfurt. At the Cyberjustice Laboratory, participants used two PCs with curved screens, webcams, and microphones, but no special graphics cards. One participant in the laboratory used another PC with a standard screen and a 3D headset. Two other participants joined the demonstration from Austria and Australia.

(Source: Cyberjustice Laboratory, Montreal)

(Source: Cyberjustice Laboratory, Montreal)

Overall, the demonstration of the virtual court platform was a success. The virtual courtroom resembled an Australian courtroom, and the participants, represented as avatars, were placed in their correct positions.

The design of the virtual courtroom mirrored that of a physical courtroom. As in many countries, the judge sat slightly higher than the other participants. Behind the judge, a window looked out onto trees, as recommended by the design guidelines of many recent courts.

(Source: David Tait & Vincent Tay (2019). Virtual Court Study: Report of a Pilot Test 2018. https://doi.org/10.26183/5d01d1418d757)

The two litigants faced each other across the well of the court and could see each other clearly at an apparent distance of about three meters. Each could also see the witness at an apparent distance of about two meters.

The virtual courtroom felt like a courtroom because a relatively high proportion of the screen showed furniture, floor, and walls, as if participants were sitting in a real courtroom. This contrasts with almost all videoconferencing systems, where the person's head takes up most of the screen. However, some participants felt somewhat more distant from the other participants than they would have in person.

The avatars were clearly visible and credible, and considerably better than the cartoon versions often seen in games or at conferences. Using the keyboard, participants could make the avatars' heads and lips move and make an avatar look toward the person it was interacting with. Because avatars are spaced apart (as in a real courtroom), the ability to turn the head toward a participant and to move the lips is already quite convincing, even when words and lip movements do not match exactly. The avatars could move freely around the room, and one participant even disappeared through the ceiling. Avatar movement should therefore be restricted.
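Two behaviours described above can be illustrated with a short Python sketch. The first is restricting where an avatar may go. The second is turning an avatar's head toward the person it addresses. The sketch assumes a simple z-up coordinate system in meters and a rectangular floor zone per seat. The names `Zone`, `constrain_position` and `look_at_yaw` are hypothetical. They do not come from the Fraunhofer platform or from Unreal Engine's API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical rectangular floor area (meters) an avatar may occupy."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_fixed: float  # pinned height: avatars can neither float nor leave through the ceiling


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(value, high))


def constrain_position(pos: tuple[float, float, float], zone: Zone) -> tuple[float, float, float]:
    """Keep a requested (x, y, z) position inside the zone and ignore vertical movement."""
    x, y, _ = pos
    return (clamp(x, zone.x_min, zone.x_max),
            clamp(y, zone.y_min, zone.y_max),
            zone.z_fixed)


def look_at_yaw(viewer: tuple[float, float, float], target: tuple[float, float, float]) -> float:
    """Horizontal heading in degrees from viewer to target (0 = +x axis, counter-clockwise)."""
    dx = target[0] - viewer[0]
    dy = target[1] - viewer[1]
    return math.degrees(math.atan2(dy, dx))


witness_box = Zone(x_min=0.0, x_max=1.0, y_min=0.0, y_max=1.0, z_fixed=0.5)
print(constrain_position((0.5, 0.5, 10.0), witness_box))  # an attempt to rise is pinned back to z = 0.5
print(look_at_yaw((0.0, 0.0, 0.0), (0.0, 3.0, 0.0)))      # the target is straight along +y
```

Pinning the height and clamping the floor position is one simple way to prevent an avatar from leaving through the ceiling. A real platform might instead snap avatars to predefined seats or rely on the engine's own collision handling.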

Sound quality was high during the demonstration, although it depends on the quality of the equipment (microphones, headsets, speakers) and on the distance between speakers and their microphones. Because participants were sitting next to each other in the same room, there was some feedback and echo. Participants detected no latency, and bandwidth requirements were very low compared to a regular video call.

This demonstration led David Tait and his team to identify several ways to improve the software:

Future prototypes would benefit from an improved process for entering the courtroom. In the demonstration, users appeared directly in the courtroom when connecting. To make the trial more realistic, users would instead enter the room through a door and walk to their places. Another improvement would be to give users different options depending on their role (e.g., to move, to silence someone, or to allow someone to speak). Users could also be offered a choice of avatars distinguished by profession (judge, lawyer, lay person), gender (male, female, neutral), ethnicity, and possibly other characteristics, and this choice could be made on the entry screen. In this demonstration, participants could switch from one avatar to another at will; this should not be possible in future prototypes. Moreover, the current version does not support hand gestures, which would be a useful feature to add. An option for people to join as visible audience members, seated in the public gallery and muted, would also be valuable, as would a video streaming option for people who are not in the gallery. Labels and name plates in front of participants would also help, showing at least their role (judge, defence lawyer, solicitor, prosecutor, defendant, witness, litigant, interpreter, clerk, judge's associate, tribunal member, etc.). The judge should be able to mute and unmute participants when needed. Finally, it would be very useful for future prototypes to run on Mac, which the current version does not support.
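Several of these proposals can be expressed as a role-based permission table: role-dependent options, judge-controlled muting, muted audience members, no avatar switching, and name plates. The Python sketch below shows one way to do this. The roles, actions and permission assignments are hypothetical illustrations chosen for this example. They are not the prototype's actual design.

```python
from enum import Enum, auto


class Role(Enum):
    JUDGE = auto()
    LAWYER = auto()
    WITNESS = auto()
    LITIGANT = auto()
    CLERK = auto()
    AUDIENCE = auto()


class Action(Enum):
    SPEAK = auto()
    MOVE = auto()
    MUTE_OTHERS = auto()
    CHANGE_AVATAR = auto()


# Hypothetical policy table. No role may change avatar mid-hearing,
# and audience members in the public gallery may do nothing but watch.
PERMISSIONS: dict[Role, frozenset[Action]] = {
    Role.JUDGE: frozenset({Action.SPEAK, Action.MUTE_OTHERS}),
    Role.LAWYER: frozenset({Action.SPEAK, Action.MOVE}),
    Role.WITNESS: frozenset({Action.SPEAK}),
    Role.LITIGANT: frozenset({Action.SPEAK}),
    Role.CLERK: frozenset({Action.SPEAK}),
    Role.AUDIENCE: frozenset(),
}


class Participant:
    def __init__(self, name: str, role: Role) -> None:
        self.name = name
        self.role = role
        self.muted = role is Role.AUDIENCE  # audience members join muted

    def can(self, action: Action) -> bool:
        return action in PERMISSIONS[self.role]

    @property
    def name_plate(self) -> str:
        """Label displayed in front of the participant, e.g. 'Smith (Judge)'."""
        return f"{self.name} ({self.role.name.title()})"


def set_muted(actor: Participant, target: Participant, muted: bool) -> None:
    """Mute or unmute target; only roles holding MUTE_OTHERS may do this."""
    if not actor.can(Action.MUTE_OTHERS):
        raise PermissionError(f"{actor.role.name} may not mute participants")
    if target.role is Role.AUDIENCE and not muted:
        raise PermissionError("audience members remain muted")
    target.muted = muted
```

For example, a judge calling `set_muted(judge, witness, True)` silences the witness. The same call made by the witness raises `PermissionError`. Keeping the rules in one table means the options offered on the entry screen and the checks made during the hearing come from a single source.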

November 2022 – Technical Tests – Harvard Visualization Lab and Cyberjustice Laboratory.

As in the first demonstration, the immersive virtual courtroom platform used the Unreal game engine and was created by Volker Settgast, Fraunhofer Institute, in Graz, Austria. The courtroom had the same configuration, and the program was again hosted on an Amazon server in Frankfurt.

The second demonstration took place at the Harvard Visualization Research Lab, which is configured as a high-tech cinema with a large curved screen and space for 20 audience members. Avatars appear life-size on the screen. Three participants joined the hearing remotely from Canada, Austria, and Australia.

(…) the witness position looking towards the judge.

Sound quality was very good in the theatre. However, the remote participants in Canada, Austria, and Australia reported hearing echoes of their own voices because the theatre had no echo-cancelling equipment.

The third demonstration took place at the Cyberjustice Laboratory in Montreal, using the same equipment as the first demonstration, with two participants joining from Australia and Austria.

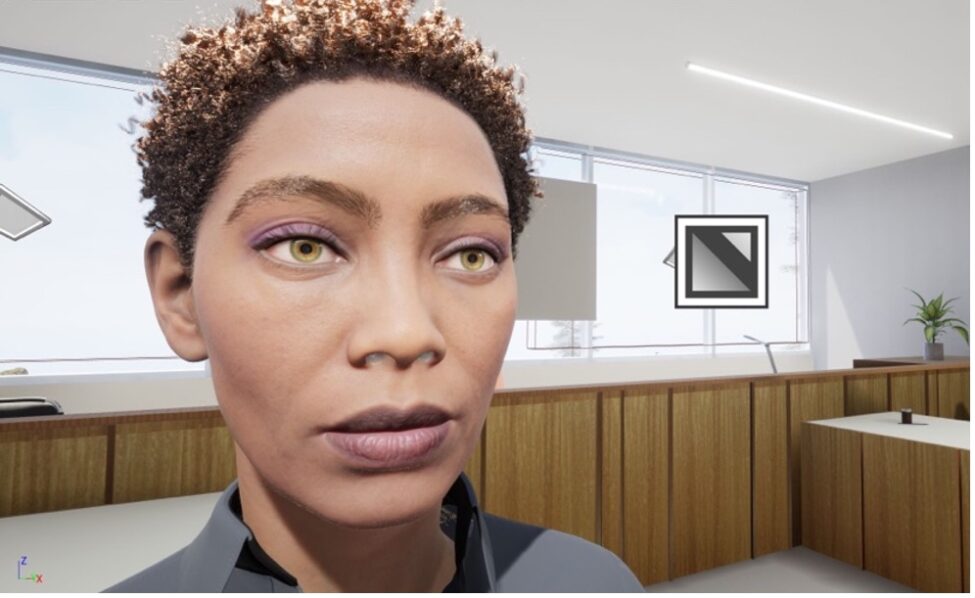

For these demonstrations, two kinds of avatars were available: the cartoon-style avatars used in the first demonstration, and photo-realistic 'MetaHumans' from the Unreal library. The photo-realistic avatars require a high-end PC with a good graphics card.

As in the first demonstration, the courtroom scene looked fairly sparse, with only a few items. Rus Gant, head of the Harvard Visualization Lab, found the lighting flat and suggested capturing 3D images of real courtrooms with Matterport to improve the virtual room.

Beyond the design of the courtroom, several issues and areas for improvement were identified. Avatars could be moved within the courtroom, but they remained in a seated position and floated in the air. In the medium term, avatars should be able to stand up and sit down once in place. Some hearings also require participants (lawyers, prosecutors, etc.) to move around, so this feature needs to work. The distance between participants also raised concerns: the judge felt quite distant from the rest of the courtroom and appeared small compared to other participants. Head tracking appeared to work, with heads turning towards the person speaking, but it required users to operate a keyboard; lip movement, by contrast, was automated. The mismatch between lip movement and words was noticeable on the Harvard Visualization Lab's large screen but was not considered a problem on the Cyberjustice Laboratory's PC screens. As in the first demonstration, hands could not be moved. One newly identified issue was turn-taking: on several occasions, people spoke at the same time. This could be avoided in the future with a hand gesture, a virtual hand signal, or a light.
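A virtual hand signal for turn-taking can be modelled as a first-come, first-served "raised hand" queue in which only one participant holds the floor at a time. The Python sketch below illustrates this under stated assumptions. The class `FloorQueue` and its methods are hypothetical. The strict queue order is one simple policy, and in practice a judge might override it.

```python
from __future__ import annotations

from collections import deque


class FloorQueue:
    """Hypothetical raised-hand queue: one speaker holds the floor, others wait in order."""

    def __init__(self) -> None:
        self._waiting: deque[str] = deque()
        self.speaker: str | None = None

    def raise_hand(self, participant: str) -> None:
        # Ignore duplicate requests and requests from the current speaker.
        if participant != self.speaker and participant not in self._waiting:
            self._waiting.append(participant)

    def lower_hand(self, participant: str) -> None:
        if participant in self._waiting:
            self._waiting.remove(participant)

    def yield_floor(self) -> str | None:
        """The current speaker finishes; the earliest raised hand (if any) gets the floor."""
        self.speaker = self._waiting.popleft() if self._waiting else None
        return self.speaker

    def hands_raised(self) -> list[str]:
        """Participants currently waiting, in order; could drive an on-screen signal or light."""
        return list(self._waiting)


queue = FloorQueue()
queue.raise_hand("Prosecutor")
queue.raise_hand("Defence lawyer")
queue.raise_hand("Prosecutor")    # duplicate request, ignored
print(queue.yield_floor())        # Prosecutor
print(queue.hands_raised())       # ['Defence lawyer']
```

Because only the participant returned by `yield_floor` holds the floor, two people cannot be given the floor at once. The platform would still need to mute or visually de-emphasize anyone speaking out of turn.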

Future demonstrations could offer a choice of avatars. In this regard, it seems that people prefer avatars that give them an alternative identity in virtual worlds. Future tests could analyze how users like to use avatars and which ones they choose. However, avatar choice may have a prejudicial effect on how participants are perceived during the judicial process, so this feature should be designed carefully. In the same spirit, different participants could see different courtrooms (e.g., a witness in a restful courtroom, a lawyer in a formal one). Future experiments should explore these possibilities. It might also be valuable to use several rooms during a single proceeding (e.g., waiting rooms, evidence display rooms). Finally, these demonstrations showed that a technical bailiff or room manager is essential to run a session successfully.

[1] Online Courts Report, GBAO to National Center for State Courts, Jury Trials in a (Post) Pandemic World – National Survey Analysis (2020), https://www.ncsc.org/__data/assets/pdf_file/0006/41001/NCSC-Juries-Post-Pandemic-World-Survey-Analysis.pdf.

[2] OECD, Access to justice and the COVID-19 pandemic: Compendium of Country practices (2020).

[3] PUDDISTER, K. and T. A. SMALL, "Trial by Zoom? The Response to COVID-19 by Canada's Courts", Cambridge University Press, 2020.

[4] LEDERER, F., “The Evolving Technology-Augmented Courtroom Before, During, and After the Pandemic”, (2021) 23 Vanderbilt Journal of Entertainment and Technology Law 301

[5] ROSSNER, M., D. TAIT and M. McCURDY, “Justice Reimagined: Challenges and Opportunities with Implementing Virtual Courts”, (2021) Current Issues in Criminal Justice.

[6] ARIZONA SUPREME COURT, Post-Pandemic Recommendations, Arizona, 2021.

[7] BANDES, S. and N. FEIGENSON, “Virtual Trials: Necessity, Invention, and the Evolution of the Courtroom”, (2020) 68-5 Buffalo Law Review 1275.

[8] TAIT, D. and V. TAY, Virtual Court Study: Report of a Pilot Test 2018, Western Sydney University, 2019.

[9] TAIT, D. and V. TAY, Virtual Court Study: Report of a Pilot Test 2018, Western Sydney University, 2019, https://doi.org/10.26183/5d01d1418d757.

[10] Ibid.

[11] Ibid.

[12] TAIT, D. and V. TAY, Virtual Court Study: Report of a Pilot Test 2018, Western Sydney University, 2019, https://doi.org/10.26183/5d01d1418d757.

[13] For more details about this pilot study, see TAIT, D. and V. TAY, Virtual Court Study: Report of a Pilot Test 2018, Western Sydney University, 2019, https://doi.org/10.26183/5d01d1418d757.

This content was updated on December 14, 2023, at 11:19.